Or as I'd put it, you can't program a computer to deal with stupid. Stupid is infinite. People don't always drive with caution or obey rules of the road. You can't program for what you cannot foresee.

An excellent example involves a car using Tesla's Autopilot:

[The driver of a Tesla Model S killed in a crash west of Williston, Florida, while using the car's autopilot feature was speeding, according to a report from the National Transportation Safety Board.

Released Tuesday, the preliminary report determined that the Tesla was traveling at 74 mph on a Florida highway with a 65 mph speed limit when it struck a truck pulling a semitrailer. "System performance data also revealed that the driver was operating the car using the advanced driver assistance features Traffic-Aware Cruise Control and Autosteer lane keeping assistance," the report reads. "The car was also equipped with automatic emergency braking that is designed to automatically apply the brakes to reduce the severity of or assist in avoiding frontal collisions."

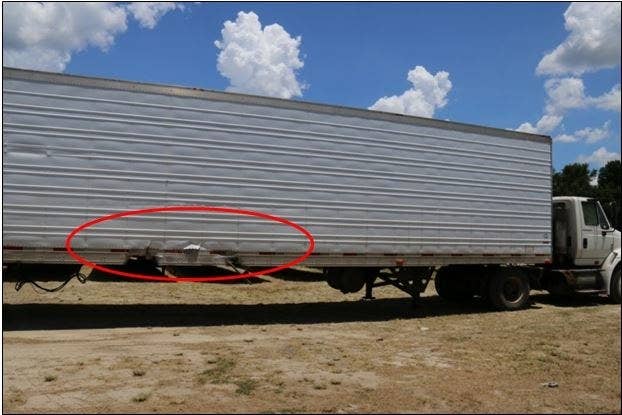

"Traffic-aware cruise control" and "autosteer lane keeping assistance" are Tesla's terms for the components that make up its autopilot, a driver-assist feature that the company calls an incremental step toward self-driving cars. When a driver turns on traffic-aware cruise control, he or she sets the speed. The car then adjusts speed according to traffic conditions.]

It's unclear from the photo, and from the BuzzFeed article linked below, whether the truck pulled out or turned in front of the Tesla. Either way, the result was the same: the driver died. The "automatic emergency braking" feature evidently failed.

https://www.buzzfeed.com/priya/driver-in-fatal-tesla-autopilot-crash-was-speeding

Where the Tesla hit the semitrailer. (Photo: National Transportation Safety Board)

[But not so fast, says MIT professor Jonathan How. “It’s going to take a long time… There’s going to be an expectation where you see cars at more autonomous levels than Tesla, for example, in that time frame, but they will only start to appear in limited deployments.”

Steven Shladover, of University of California Berkeley’s PATH (Partners for Advanced Transportation Technology) program, agrees: “One might be able to drive in an autonomous vehicle in a very limited set of conditions ... but to get to a vehicle that can get everywhere, that people can ride in all sorts of weather conditions, is not something that’ll happen in my lifetime," he says. "And I don’t think it’ll happen in your lifetime, either."

Self-driving cars may not take over our roads by 2020, or even close to that, but they will become more and more prevalent in, How believes, “limited deployments,” like in certain cities, campuses, and airports. And while the world’s biggest tech and automotive companies run real-world tests to make autonomous driving a reality, these robot cars are going to drive slowly, cautiously — and make us a little carsick — until they can learn to drive like humans.]

https://www.buzzfeed.com/nicolenguyen/not-too-fast-not-too-furious-self-driving-uber-atg

In real life, people run red lights. In Orlando, FL, where I used to live, a green light meant little, since drivers always seemed to try to make it through intersections in the last nanosecond of a yellow light.

https://www.buzzfeed.com/carolineodonovan/heres-a-video-of-a-self-driving-uber-running-a-red-light

Not even the smartest smart car can predict a careless driver trying to cross a three-lane road when the first two lanes have stopped. Still, safe driving means stopping where the other two lanes did and peeking around for someone trying to sneak across the road, which the autonomous Uber car in a Tempe, AZ, crash did not do:

https://www.azcentral.com/story/money/business/tech/2017/03/29/tempe-releases-police-report-uber-crash/99797486/

So y'all wait for your utopian future of self-driving cars on autopilot; as for me, I'll put my faith in my faulty fellow humans (and toll collectors).
